Real Time Streaming Video/Audio

ESP32 / ESP32-CAM

ILI9341 LCD Display & Touch

Sound sensor

Arduino Code for ESP32

Copy and paste the code below into a new sketch, then compile and run it.

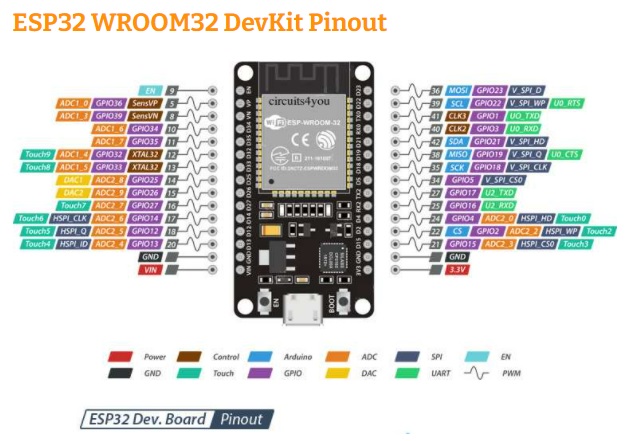

Notes: The ESP32 has no built-in microphone, unlike the Arduino Nano 33 BLE Sense, so I used a **GY-MAX4466** microphone module wired to GPIO pin 32 of an ESP32 DevKit V1 development board.

Data is sent to your PC over Wi-Fi as UDP packets of 1000 bytes.

Every 2 seconds, while the blue LED is on, the "command" is recorded and sent. The sampling rate is 4 kHz, so frequencies above 2 kHz (the Nyquist limit) are not captured.

Each sample is encoded as one unsigned 8-bit byte (unsigned char).

NB: To play this format over I2S, you will need to convert it to 16-bit samples at a sample rate of at least 8 kHz.
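The conversion mentioned in the note can be sketched in a few lines of Python. This is only an illustration, not code from this project: the function name `u8_4k_to_s16_8k` is made up for the example, and doubling the rate by repeating each sample is an assumption (a real resampler would interpolate or filter).

```python
import struct

def u8_4k_to_s16_8k(raw: bytes) -> bytes:
    """Convert unsigned 8-bit mono samples at 4 kHz to signed 16-bit
    little-endian samples at 8 kHz by repeating every sample twice."""
    out = bytearray()
    for sample in raw:
        # Centre the 0..255 range on zero, then scale to the 16-bit range.
        value = (sample - 128) << 8          # -32768 .. 32512
        packed = struct.pack("<h", value)
        out += packed + packed               # naive 2x upsampling (sample repeat)
    return bytes(out)

pcm = u8_4k_to_s16_8k(bytes([0, 128, 255]))
print(len(pcm))                    # 12 bytes: 3 samples x 2 repeats x 2 bytes
print(struct.unpack("<6h", pcm))   # (-32768, -32768, 0, 0, 32512, 32512)
```

A 4000-byte recording becomes 16000 bytes after this conversion.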

#include <Arduino.h>

#include "soc/soc.h" // Disable brownout problems

#include "soc/rtc_cntl_reg.h" // Disable brownout problems

#include <WiFi.h>

#include <WiFiClient.h>

#include <WiFiUdp.h>

#include "esp_task_wdt.h"

#ifdef ARDUINO_ARCH_ESP32

#include "platglue-esp32.h"

#else

#include "platglue-posix.h"

#endif

#define PinSound 32

unsigned long intervalMicros = 250; //us 4000 Hz

unsigned long nextMicros = 0;

int sending;

IPAddress peer = IPAddress(192,168,111,2);

// IPAddress masterip,otherip,myIP;

byte packetBuffer[1500]; //buffer to hold incoming and outgoing packets

IPAddress masterip,otherip,myIP;

byte odd=0;

byte reboot_count=0;

// Set these to your desired credentials.

const char *ssid = "tuto";

const char *password = "tuto";

WiFiUDP udp2;

// WiFiUDP udp2;

long currentSession;

int soundon=0;

byte soutbuf[4000];

int writebuf=0;

int outbuf=0;

int nb=0;

void setup() {

WRITE_PERI_REG(RTC_CNTL_BROWN_OUT_REG, 0); //disable brownout detector

Serial.begin(115200);

// sleep(2);

Serial.print("Initializing");

pinMode(PinSound,INPUT);

pinMode(2,OUTPUT);

digitalWrite(2,LOW);

esp_task_wdt_init(1800,false);

esp_task_wdt_add(NULL);

Serial.println("Init UDP");

initap();

udp2.begin(11674);

nextMicros = micros();

Serial.println("Ok");

}

void initap()

{

Serial.println();

Serial.println("Configuring access point...");

WiFi.disconnect(true);

// You can remove the password parameter if you want the AP to be open.

WiFi.softAP(ssid, password);

myIP = WiFi.softAPIP();

delay(100);

Serial.println("Set softAPConfig");

IPAddress Ip(192, 168, 111, 1);

IPAddress NMask(255, 255, 255, 0);

WiFi.softAPConfig(Ip, Ip, NMask);

myIP = WiFi.softAPIP();

Serial.print("AP IP address: ");

Serial.println(myIP);

}

void checknet2()

{

int dsize, av;

String cmd;

byte dst[1500];

udp2.parsePacket();

if ((dsize=udp2.read(dst,1500)) > 0) {

Serial.println("command received, speak");

soundon=1;

digitalWrite(2,HIGH);

}

if(outbuf >= writebuf + 1000)

{

udpsocketsend(&udp2,soutbuf+writebuf,1000, peer,11674);

writebuf+=1000;

Serial.println("+");

if(writebuf>=4000)

{

writebuf=0;

// Serial.println("writ 0");

}

}

}

void loop() {

unsigned long currentMicros = micros();

if(currentMicros < nextMicros )

{

// yield();

return;

}

nextMicros = currentMicros + intervalMicros;

if(soundon==1){

int level = analogRead(PinSound);

level = map(level, 0, 4095, 0, 255);

if(outbuf>=4000)

{

Serial.println("outbuf 0");

outbuf=0;

soundon=0;

digitalWrite(2,LOW);

}

soutbuf[outbuf]=level;

// Serial.println(level);

outbuf++;

}

checknet2();

yield();

}

Thread for UDP reading

Packets are sent on UDP port 11674.

import wave
import time
import sys
import socket
import datetime
import asyncio
from threading import Thread
from os.path import basename

import numpy as np
import matplotlib.pyplot as plt
import sounddevice as sd

sock=None
soundin=bytearray(4000)  # one full recording: 4 packets of 1000 bytes
index=0
filenb=0
filename="gauche"

def traiter(pload,ip,port):
    # Store one 1000-byte packet; once 4000 bytes are collected, write them to a .raw file.
    global index
    global soundin
    global filenb
    global filename
    #print("received from",ip,port," datasize ",len(pload),"index=",index)
    soundin[index:index+1000]=pload[0:1000]
    index+=1000
    if index >=4000:
        index=0
        file = open(str(filename)+str(filenb)+".raw", "wb")
        file.write(soundin)
        file.flush()
        file.close()
        print("file written : ", str(filename)+str(filenb)+".raw","index=",index)
        filenb+=1

class UdpRec(Thread):
    def __init__(self, lettre):
        Thread.__init__(self)
        self.lettre = lettre

    def run(self):
        global sock
        asyncio.set_event_loop(asyncio.new_event_loop())
        print("Running UdpRec 1")
        UDP_IP = "192.168.111.2"
        UDP_PORT = 11674
        sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
        sock.bind((UDP_IP, UDP_PORT))
        while True:
            data, addr = sock.recvfrom(1500) # buffer size is 1500 bytes
            #print("received",data)
            ip=addr[0]
            port=addr[1]
            finalip=ip+":"+str(port)
            if(len(data)<100):
                print("Something wrong or ack",data)
            else:
                traiter(data,ip,port)

try:
    print("start Udp thread")
    th1=UdpRec("th1")
    th1.start()
except:
    print("Error")
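The reassembly done by the receiving thread (four 1000-byte packets make one 4000-byte recording) can also be sketched without globals or sockets, which makes it easier to test. The class name `RecordingAssembler` and its `feed` method are hypothetical names for this illustration. Like the code above, the sketch assumes packets arrive in order and that none are lost, which UDP does not guarantee.

```python
class RecordingAssembler:
    """Collects fixed-size UDP payloads until one full recording is available."""

    def __init__(self, packet_size=1000, recording_size=4000):
        self.packet_size = packet_size
        self.recording_size = recording_size
        self.buffer = bytearray(recording_size)
        self.index = 0

    def feed(self, payload: bytes):
        """Store one payload; return the full recording when complete, else None."""
        chunk = payload[:self.packet_size]
        # Same-length slice assignment, so the buffer size never changes.
        self.buffer[self.index:self.index + len(chunk)] = chunk
        self.index += self.packet_size
        if self.index >= self.recording_size:
            self.index = 0
            return bytes(self.buffer)
        return None

assembler = RecordingAssembler()
for n in range(4):
    recording = assembler.feed(bytes([n]) * 1000)
print(len(recording))  # 4000
```

The first three calls return None; only the fourth returns the complete recording, which could then be written to a .raw file.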

Then run this cell for every command

msg = "hello"
msgb=bytes(msg,'utf-8')
for i in np.arange(50):
    sock.sendto(msgb,('192.168.111.1',11674))
    time.sleep(2)
print("OK")

#include <stdlib.h>

#include <stdint.h>

// inverse-sine lookup table: isin_data[i] is the angle whose sine is i/128, in units where 256 = 90°

unsigned char isin_data[128]=

{0, 1, 3, 4, 5, 6, 8, 9, 10, 11, 13, 14, 15, 17, 18, 19, 20,

22, 23, 24, 26, 27, 28, 29, 31, 32, 33, 35, 36, 37, 39, 40, 41, 42,

44, 45, 46, 48, 49, 50, 52, 53, 54, 56, 57, 59, 60, 61, 63, 64, 65,

67, 68, 70, 71, 72, 74, 75, 77, 78, 80, 81, 82, 84, 85, 87, 88, 90,

91, 93, 94, 96, 97, 99, 100, 102, 104, 105, 107, 108, 110, 112, 113, 115, 117,

118, 120, 122, 124, 125, 127, 129, 131, 133, 134, 136, 138, 140, 142, 144, 146, 148,

150, 152, 155, 157, 159, 161, 164, 166, 169, 171, 174, 176, 179, 182, 185, 188, 191,

195, 198, 202, 206, 210, 215, 221, 227, 236};

uint16_t Pow2[14]={1,2,4,8,16,32,64,128,256,512,1024,2048,4096};

unsigned char RSSdata[20]={7,6,6,5,5,5,4,4,4,4,3,3,3,3,3,3,3,2,2,2};

// functions declaration

int16_t fast_sine(int16_t Amp, int16_t th);

int16_t fastRSS(int16_t a, int16_t b);

int16_t fast_cosine(int16_t Amp, int16_t th);

// fft function

void imff(int32_t *in,int32_t N,int32_t *out)

{

int16_t a,c1,f,o,x,data_max,data_min=0;

int32_t data_avg,data_mag,temp11;

unsigned char scale,check=0;

data_max=0;

data_avg=0;

data_min=0;

int16_t allz=1;

for(int16_t i=0;i<N;i++)

if(in[i])

allz=0;

if(allz)

return;

for(int16_t i=0;i<12;i++) //calculating the levels

{

if(Pow2[i]<=N)

{

o=i;

}

}

a=Pow2[o];

int16_t out_r[a]; //real part of transform

int16_t out_im[a]; //imaginary part of transform

for(int16_t i=0;i<a;i++) //getting min, max and average for scaling

{

out_r[i]=0; out_im[i]=0;

data_avg=data_avg+in[i];

if(in[i]>data_max)

{

data_max=in[i];

}

if(in[i]<data_min)

{

data_min=in[i];

}

}

data_avg=data_avg>>o;

scale=0;

data_mag=data_max-data_min;

temp11=data_mag;

//scaling data from +512 to -512

if(data_mag>1024)

{

while(temp11>1024)

{

temp11=temp11>>1;

scale=scale+1;

}

}

if(data_mag<1024)

{

while(temp11<1024)

{

temp11=temp11<<1;

scale=scale+1;

}

}

if(data_mag>1024)

{

for(int16_t i=0;i<a;i++)

{

in[i]=in[i]-data_avg;

in[i]=in[i]>>scale;

}

scale=128-scale;

}

if(data_mag<1024)

{

scale=scale-1;

for(int16_t i=0;i<a;i++)

{

in[i]=in[i]-data_avg;

in[i]=in[i]<<scale;

}

scale=128+scale;

}

x=0;

for(int16_t b=0;b<o;b++) // bit-reversal order stored in out_im array

{

c1=Pow2[b];

f=Pow2[o]/(c1+c1);

for(int16_t j=0;j<c1;j++)

{

x=x+1;

out_im[x]=out_im[j]+f;

}

}

for(int16_t i=0;i<a;i++) // update input array as per bit reverse order

{

out_r[i]=in[out_im[i]];

out_im[i]=0;

}

int16_t i10,i11,n1,tr,ti;

float e;

int16_t c,s,temp4;

for(int16_t i=0;i<o;i++) //fft

{

i10=Pow2[i]; // overall values of sine/cosine

i11=Pow2[o]/Pow2[i+1]; // loop with similar sine cosine

e=1024/Pow2[i+1]; //1024 is equivalent to 360 deg

e=0-e;

n1=0;

for(int16_t j=0;j<i10;j++)

{

c=e*j; //c is angle as where 1024 unit is 360 deg

while(c<0){c=c+1024;}

while(c>1024){c=c-1024;}

n1=j;

for(int16_t k=0;k<i11;k++)

{

temp4=i10+n1;

if(c==0) {tr=out_r[temp4];

ti=out_im[temp4];}

else if(c==256) {tr= -out_im[temp4];

ti=out_r[temp4];}

else if(c==512) {tr=-out_r[temp4];

ti=-out_im[temp4];}

else if(c==768) {tr=out_im[temp4];

ti=-out_r[temp4];}

else if(c==1024){tr=out_r[temp4];

ti=out_im[temp4];}

else{

tr=fast_cosine(out_r[temp4],c)-fast_sine(out_im[temp4],c); //the fast sine/cosine function gives direct (approx) output for A*sinx

ti=fast_sine(out_r[temp4],c)+fast_cosine(out_im[temp4],c);

}

out_r[n1+i10]=out_r[n1]-tr;

out_r[n1]=out_r[n1]+tr;

if(out_r[n1]>15000 || out_r[n1]<-15000){check=1;} //guard against int16_t overflow (its range is -32768 to +32767)

out_im[n1+i10]=out_im[n1]-ti;

out_im[n1]=out_im[n1]+ti;

if(out_im[n1]>15000 || out_im[n1]<-15000){check=1;}

n1=n1+i10+i10;

} // for int16_t k=

}// for int16_t j=

if(check==1)

{ // scaling the arrays if a value is higher than 15000 to prevent the variable from overflowing

for(int16_t i=0;i<a;i++)

{

out_r[i]=out_r[i]>>1;

out_im[i]=out_im[i]>>1;

}

check=0;

scale=scale-1; // tracking overall scaling of input data

}

} //for int16_t i=

if(scale>128)

{

scale=scale-128;

for(int16_t i=0;i<a;i++)

{

out_r[i]=out_r[i]>>scale;

out_im[i]=out_im[i]>>scale;

}

scale=0;

} // reverse all the scaling done so far

else

{

scale=128-scale;

} // in case a number gets higher than 32000, it is represented as a multiple of 2^scale

for(int16_t i=0;i<a;i++)

{

out[i]=out_r[i];

out[i+N]=out_im[i];

}

}

//---------------------------------fast sine/cosine---------------------------------------//

int16_t fast_sine(int16_t Amp, int16_t th)

{

int16_t temp3,m1,m2;

unsigned char temp1,temp2, test,quad,accuracy;

accuracy=5; // set its value from 1 to 7, where 7 is the most accurate but slowest

// an accuracy value of 5 is recommended for typical applications

while(th>1024)

{

th=th-1024;

} // here 1024 = 2*pi or 360 deg

while(th<0)

{

th=th+1024;

}

quad=th>>8;

if(quad==1){th= 512-th;}

else if(quad==2){th= th-512;}

else if(quad==3){th= 1024-th;}

temp1= 0;

temp2= 128; //2 multiple

m1=0;

m2=Amp;

temp3=(m1+m2)>>1;

Amp=temp3;

for(int16_t i=0;i<accuracy;i++)

{

test=(temp1+temp2)>>1;

temp3=temp3>>1;

if(th>isin_data[test])

{

temp1=test;

Amp=Amp+temp3;

m1=Amp;

}

else

if(th<isin_data[test])

{

temp2=test;

Amp=Amp-temp3;

m2=Amp;

}

}

if(quad==2)

{

Amp= 0-Amp;

}

else

if(quad==3)

{

Amp= 0-Amp;

}

return Amp;

}

int16_t fast_cosine(int16_t Amp, int16_t th)

{

th=256-th; //cos th = sin (90-th) formula

return(fast_sine(Amp,th));

}

//--------------------------------------------------------------------------------//

//--------------------------------Fast RSS----------------------------------------//

int16_t fastRSS(int16_t a, int16_t b)

{

if(a==0 && b==0)

{

return(0);

}

int16_t min,max,temp1,temp2;

unsigned char clevel;

if(a<0)

{

a=-a;

}

if(b<0)

{

b=-b;

}

clevel=0;

if(a>b)

{

max=a;

min=b;

}

else

{

max=b;

min=a;

}

if(max>(min+min+min))

{

return max;

}

else

{

temp1=min>>3; if(temp1==0){temp1=1;}

temp2=min;

while(temp2<max)

{

temp2=temp2+temp1;

clevel=clevel+1;

}

temp2=RSSdata[clevel];

temp1=temp1>>1;

for(int16_t i=0;i<temp2;i++)

{

max=max+temp1;

}

return max ;

}

}
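The isin_data lookup table at the top of the C file appears to hold inverse-sine values: entry i is the angle whose sine is i/128, with 256 units standing for 90°. The Python sketch below regenerates such a table. The function name `inverse_sine_table` and the use of plain rounding are assumptions for this illustration; only a handful of entries were checked against the C table, so treat it as a way to understand the table rather than as its original generator.

```python
import math

def inverse_sine_table(size=128, quarter_turn=256):
    """Angle whose sine is i/size, in units where quarter_turn == 90 degrees."""
    return [round(math.asin(i / size) / (math.pi / 2) * quarter_turn)
            for i in range(size)]

table = inverse_sine_table()
print(table[:8])               # [0, 1, 3, 4, 5, 6, 8, 9]
print(table[64], table[127])   # 85 236
```

For example, entry 64 corresponds to a sine of 0.5, i.e. 30°, which is 30/90 x 256 ≈ 85 in these units.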

Compile to a shared C library for use from Python code:

!cc -fPIC -shared -o imfft.so im.c

To compute the spectrogram, we compute the amplitude of the complex FFT values as uint32.

C code for a fast integer square root on an MCU, from Microchip:

#define ushort unsigned short

ushort SQRTint(unsigned long *pvalue)

{

unsigned long value = *pvalue;

ushort sqrt = 0;

ushort bitShift;

for(bitShift = 0x8000; bitShift > 0; bitShift >>= 1)

{

ushort trial = sqrt | bitShift;

unsigned long tmp = (unsigned long)trial * trial; // portable form of the Microchip compiler built-in __builtin_muluu(trial, trial)

if(tmp <= value)

{

sqrt = trial;

}

}

return sqrt;

}
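The same bit-by-bit square root can be tried out in Python before porting it to the MCU. This is a direct transcription of the loop above under the assumption of a 32-bit input and a 16-bit result; the name `sqrt_int` is chosen for the example.

```python
def sqrt_int(value: int) -> int:
    """Integer square root of a 32-bit unsigned value, built bit by bit."""
    root = 0
    bit = 0x8000                      # highest bit of a 16-bit result
    while bit > 0:
        trial = root | bit
        if trial * trial <= value:    # keep the bit if the square still fits
            root = trial
        bit >>= 1
    return root

print(sqrt_int(0), sqrt_int(15), sqrt_int(16), sqrt_int(1_000_000))  # 0 3 4 1000
```

Each of the 16 iterations decides one bit of the result, so the running time does not depend on the input value.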

import matplotlib.pyplot as plt

import numpy as np

import copy

import time

import ctypes

from ctypes import *

#!pip install tensorflow==2.2

test=np.arange(16)

#test=np.ones(16,dtype=np.int32);

test=test.astype(np.int32)

test=test.ctypes.data_as(POINTER(ctypes.c_int32))

xx = CDLL('imspec.so')

xx.imff.argtypes = POINTER(c_int32),c_int32,POINTER(c_int32)  # matches imff(int32_t *in, int32_t N, int32_t *out)

xx.imff.restype = None

out = (c_int32*32)()

inn = (c_int16*32)()

ts=time.time_ns()

xx.imff(test,16,out)

te=time.time_ns()

print(te-ts) # execution time in nanoseconds, useful when optimising

#print(list(test))

print(list(out))

testnp=np.int32(out)

171452 [24, 17, 10, 8, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]

def imfft(inn, N):
    # Call the C routine on N samples and keep the first N/4 output values.
    N2=np.int32(N*2)
    inn=inn.astype(np.int32)
    inn=inn.ctypes.data_as(POINTER(c_int32))
    out = (ctypes.c_int32*N2)()
    xx.imff(inn,N,out)
    outnp=np.int32(out[:N//4])
    return outnp

test=np.arange(128)
ts=time.time_ns()
tt=imfft(test,128)   # time the FFT call itself, not the creation of the test array
te=time.time_ns()
print(te-ts)
print(tt)
print(tt.shape)

53882
[1318 1079 585 408 312 249 210 189 163 146 131 121 115 104 101 101 94 87 82 80 76 73 71 71 70 68 66 66 65 63 64 68]
(32,)

The following code is a customization of the official TensorFlow tutorial at https://www.tensorflow.org/tutorials/audio/simple_audio

FFT

# pip install tensorflow==2.3

import os

import pathlib

import matplotlib.pyplot as plt

import numpy as np

import seaborn as sns

import tensorflow as tf

from tensorflow.keras import layers

from tensorflow.keras import models

from IPython import display

# Set the seed value for experiment reproducibility.

seed = 42

tf.random.set_seed(seed)

np.random.seed(seed)

print(tf.__version__)

2.2.0

Optional: Check if a GPU is available

print("Num GPUs Available: ", len(tf.config.list_physical_devices('GPU')))

Num GPUs Available: 0

2022-04-03 18:48:46.344056: W tensorflow/stream_executor/platform/default/dso_loader.cc:55] Could not load dynamic library 'libcuda.so.1'; dlerror: libcuda.so.1: cannot open shared object file: No such file or directory; LD_LIBRARY_PATH: /usr/local/cuda/lib64:
2022-04-03 18:48:46.344083: E tensorflow/stream_executor/cuda/cuda_driver.cc:313] failed call to cuInit: UNKNOWN ERROR (303)
2022-04-03 18:48:46.344100: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:156] kernel driver does not appear to be running on this host (feynmannode01.cluster.local): /proc/driver/nvidia/version does not exist

DATASET_PATH = 'data/imds'

data_dir = pathlib.Path(DATASET_PATH)

commands = np.array(tf.io.gfile.listdir(str(data_dir)))

commands = commands[commands != 'README.md']

print('Commands:', commands)

Commands: ['avance' 'recule' 'stop' 'droite' 'gauche']

filenames = tf.io.gfile.glob(str(data_dir) + '/*/*')

filenames = tf.random.shuffle(filenames)

num_samples = len(filenames)

print('Number of total examples:', num_samples)

print('Number of examples per label:',

len(tf.io.gfile.listdir(str(data_dir/commands[0]))))

print('Example file tensor:', filenames[0])

Number of total examples: 165
Number of examples per label: 35
Example file tensor: tf.Tensor(b'data/imds/recule/recule15.raw', shape=(), dtype=string)

train_files = filenames[:120]

val_files = filenames[120: 140]

test_files = filenames[-25:]

print('Training set size', len(train_files))

print('Validation set size', len(val_files))

print('Test set size', len(test_files))

Training set size 120
Validation set size 20
Test set size 25

print(train_files[0])

print(str(train_files[0].numpy()))

print(train_files[0].numpy().decode('utf-8'))

tf.Tensor(b'data/imds/recule/recule15.raw', shape=(), dtype=string)
b'data/imds/recule/recule15.raw'
data/imds/recule/recule15.raw

Check Our Data

parts = tf.strings.split( input=train_files[0], sep=os.path.sep)

print(parts)

audio_binary = tf.io.read_file('data/imds/avance/avance25.raw')

type(audio_binary )

#print(audio_binary)

npa=audio_binary.numpy()

npa=np.frombuffer(npa,dtype='uint8')

npa.shape

print(npa)

tf.Tensor([b'data' b'imds' b'recule' b'recule15.raw'], shape=(4,), dtype=string)
[103 114 113 ... 111 112 110]

bbb = tf.io.read_file('data/imds/avance/avance25.raw',tf.string)

#print(bbb)

#im=tf.io.serialize_tensor(bbb)

iim=tf.io.decode_raw( bbb, np.uint8, little_endian=True, fixed_length=None, name=None)

print(iim)

tf.Tensor([103 114 113 ... 111 112 110], shape=(4000,), dtype=uint8)

Function to get the raw data and the label from the file name

def get_waveform_and_label(file_path):
    # The label is the name of the parent directory: data/imds/<label>/<file>.raw
    parts = tf.strings.split(
        input=file_path,
        sep=os.path.sep)
    print(file_path)
    #label = get_label(file_path)
    audio_binary=tf.io.read_file(file_path)
    #audio_binary = np.fromfile(file_path.numpy().decode('utf-8'), dtype='uint8')
    waveform = tf.io.decode_raw( audio_binary, np.uint8, little_endian=True, fixed_length=None, name=None)
    return waveform, parts[-2]

tf.data.AUTOTUNE is available in TensorFlow 2.8 but not in the version used here, so the following stays commented out:

# AUTOTUNE = tf.data.AUTOTUNE

files_ds = tf.data.Dataset.from_tensor_slices(train_files)

train_ds = files_ds.map(

map_func=get_waveform_and_label)

Tensor("args_0:0", shape=(), dtype=string)

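# Build the validation set from val_files (mapping files_ds here would reuse the training files).
val_files_ds = tf.data.Dataset.from_tensor_slices(val_files)
val_ds = val_files_ds.map(
    map_func=get_waveform_and_label)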

Tensor("args_0:0", shape=(), dtype=string)

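# Build the test set from test_files (mapping files_ds here would reuse the training files).
test_files_ds = tf.data.Dataset.from_tensor_slices(test_files)
test_ds = test_files_ds.map(
    map_func=get_waveform_and_label)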

Tensor("args_0:0", shape=(), dtype=string)

Let's display and check some files

rows = 3
cols = 3
n = rows * cols
fig, axes = plt.subplots(rows, cols, figsize=(10, 12))
for i, (audio, label) in enumerate(train_ds.take(n)):
    r = i // cols
    c = i % cols
    ax = axes[r][c]
    ax.plot(np.frombuffer(audio,dtype='uint8'))
    ax.set_yticks(np.arange(0, 255, 40))
    label = label.numpy().decode('utf-8')
    ax.set_title(label)
plt.show()

Functions To Compute Spectrogram

Voice samples are used to generate a spectrogram of 29x32 values for each file. We can use it as single-channel image data with Conv2D layers for better results.

def im_stft(signal, frame_length=128, frame_step=64):
    sig=np.uint8(signal)
    #sig=np.frombuffer(signal,dtype='uint8')
    nbsamples=2024 # np.size(signal)
    nbsteps=np.uint8((nbsamples-frame_length)/frame_step)
    spec_out=np.ndarray(shape=(nbsteps,np.int16(frame_length/4)), dtype=np.int32)
    for i in np.arange(nbsteps):
        #com=np.fft.fft(signal[i*frame_step:i*frame_step+frame_length] ) #Approx_FFT(signal[i*frame_step:i*frame_step+frame_length],frame_length,1)
        #cc=out_r+1j*out_im
        spec_out[i]=imfft(sig[i*frame_step:i*frame_step+frame_length],frame_length)
    ret=tf.convert_to_tensor (spec_out)
    return ret

def get_im_spectrogram(waveform):
    spectrogram = im_stft(waveform[1000:3024], frame_length=128, frame_step=64)
    # Obtain the magnitude of the STFT.
    #spectrogram = tf.abs(spectrogram)
    # Add a `channels` dimension, so that the spectrogram can be used
    # as image-like input data with convolution layers (which expect
    # shape (`batch_size`, `height`, `width`, `channels`).
    spectrogram = spectrogram[..., tf.newaxis]
    return spectrogram

for waveform, label in train_ds.take(1):
    label = label.numpy().decode('utf-8')
    spectrogram = get_im_spectrogram(waveform)

print('Label:', label)
print('Waveform shape:', waveform.shape)
print('Spectrogram shape:', spectrogram.shape)
print('Audio playback')
display.display(display.Audio(waveform[1000:3024], rate=4000))

Label: recule
Waveform shape: (4000,)
Spectrogram shape: (29, 32, 1)
Audio playback
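The spectrogram shape follows directly from the framing parameters: 2024 samples cut into frames of 128 samples with a step of 64, keeping a quarter of the 128 values per frame. The helper below only reproduces that arithmetic; its name `stft_shape` and the `kept_fraction` parameter are invented for this sketch.

```python
def stft_shape(nb_samples, frame_length, frame_step, kept_fraction=4):
    """Return (number of frames, number of values kept per frame)."""
    nb_frames = (nb_samples - frame_length) // frame_step
    nb_bins = frame_length // kept_fraction
    return nb_frames, nb_bins

print(stft_shape(2024, 128, 64))  # (29, 32)
```

Assuming the C routine returns magnitudes in natural bin order, each bin is 4000 / 128 = 31.25 Hz wide at the 4 kHz sampling rate, so the 32 kept values would cover roughly 0 to 1 kHz.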

def plot_spectrogram(spectrogram, ax):
    spectrogram=tf.cast(spectrogram,dtype=tf.float64)
    if len(spectrogram.shape) > 2:
        assert len(spectrogram.shape) == 3
        spectrogram = np.squeeze(spectrogram, axis=-1)
    # Convert the frequencies to log scale and transpose, so that the time is
    # represented on the x-axis (columns).
    # Add an epsilon to avoid taking a log of zero.
    log_spec = np.log(spectrogram.T + np.finfo(float).eps)
    height = log_spec.shape[0]
    width = log_spec.shape[1]
    X = np.linspace(0, np.size(spectrogram), num=width, dtype=int)
    Y = range(height)
    ax.pcolormesh(X, Y, log_spec)

fig, axes = plt.subplots(2, figsize=(12, 8))

timescale = np.arange(waveform.shape[0])

axes[0].plot(timescale, waveform)

axes[0].set_title('Waveform')

axes[0].set_xlim([0, 4000])

plot_spectrogram(spectrogram.numpy(), axes[1])

axes[1].set_title('Spectrogram')

plt.show()

spectrogram.shape

TensorShape([29, 32, 1])

'''

def get_spectrogram(waveform):

# Zero-padding for an audio waveform with less than 16,000 samples.

waveform = tf.cast(waveform, dtype=tf.float32)

spectrogram = tf.signal.stft(

waveform, frame_length=128, frame_step=64)

# Obtain the magnitude of the STFT.

spectrogram = tf.abs(spectrogram)

# Add a `channels` dimension, so that the spectrogram can be used

# as image-like input data with convolution layers (which expect

# shape (`batch_size`, `height`, `width`, `channels`).

spectrogram = spectrogram[..., tf.newaxis]

return spectrogram

'''


Now define a function that transforms the waveform dataset into spectrograms and their corresponding labels into integer IDs:

aa=(label == commands)

print(aa)

[False False False False True]

def immaxarg(aa):
    # Return the index of the first True entry (or len(aa) if there is none).
    i=0
    for a in aa.numpy():
        if a==True:
            return i
        i+=1
    return i

def get_spectrogram_and_label_id(audio, label):
    spectrogram = get_im_spectrogram(audio)
    #label_id = tf.math.argmax(label == commands)
    label_id=immaxarg(label == commands)
    return spectrogram, np.int32(label_id)
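The label-to-ID step is just a lookup of the label's position in the list of commands. A plain-Python sketch of the same idea is shown below, using the command list printed earlier; `label_to_id` is a hypothetical helper, not part of the notebook.

```python
commands = ['avance', 'recule', 'stop', 'droite', 'gauche']

def label_to_id(label, known_labels):
    """Return the position of label in known_labels (ValueError if unknown)."""
    return known_labels.index(label)

print(label_to_id('gauche', commands))  # 4
```

One difference from immaxarg: an unknown label raises an error here, whereas immaxarg returns the length of the list, which is not a valid class index.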

for waveform, label in train_ds.take(1):
    spectrogram,lab = get_spectrogram_and_label_id(waveform,label)

print(type(lab))
print(type(spectrogram))
print(lab.shape)
print(spectrogram.shape)
print("lab",lab.dtype)
print("spec", spectrogram.dtype)

<class 'numpy.int32'>
<class 'tensorflow.python.framework.ops.EagerTensor'>
()
(29, 32, 1)
lab int32
spec <dtype: 'int32'>

sp=[]
lb=[]
for waveform, label in train_ds:
    spectrogram,lab = get_spectrogram_and_label_id(waveform,label)
    sp.append( spectrogram )
    lb.append( lab)
spectrogram_train_ds = tf.data.Dataset.from_tensor_slices((sp, lb))

sp=[]
lb=[]
for waveform, label in val_ds:
    spectrogram,lab = get_spectrogram_and_label_id(waveform,label)
    sp.append( spectrogram )
    lb.append( lab)
spectrogram_val_ds = tf.data.Dataset.from_tensor_slices((sp, lb))

sp=[]
lb=[]
for waveform, label in test_ds:
    spectrogram,lab = get_spectrogram_and_label_id(waveform,label)
    sp.append( spectrogram )
    lb.append( lab)
spectrogram_test_ds = tf.data.Dataset.from_tensor_slices((sp, lb))

Map get_spectrogram_and_label_id across the elements of the dataset with Dataset.map:

#spectrogram_ds=tf.data.Dataset.from_generator(genspec, (tf.int32, tf.int64))

'''

ot = (tf.int32, tf.int64)

os = (tf.TensorShape([60, 64, 1]), tf.TensorShape([]))

spectrogram_ds = tf.data.Dataset.from_generator(genspec,

output_types = ot,

output_shapes = os)

#ds = ds.batch(BATCH_SIZE)

'''


#sess.as_default()

#spectrogram_ds = waveform_ds.map(

# map_func=get_spectrogram_and_label_id)

'''def get_spectrogram_and_label(file_path):

parts = tf.strings.split(

input=file_path,

sep=os.path.sep)

print(file_path)

#label = get_label(file_path)

audio_binary=tf.io.read_file(file_path)

#audio_binary = np.fromfile(file_path.numpy().decode('utf-8'), dtype='uint8')

waveform = tf.io.decode_raw( audio_binary, np.uint8, little_endian=True, fixed_length=None, name=None)

return get_im_spectrogram(waveform.numpy()[0]), parts[-2]

'''

rows = 3
cols = 3
n = rows*cols
fig, axes = plt.subplots(rows, cols, figsize=(10, 10))
for i, (spectrogram, label_id) in enumerate(spectrogram_train_ds.take(n)):
    r = i // cols
    c = i % cols
    ax = axes[r][c]
    plot_spectrogram(spectrogram.numpy(), ax)
    ax.set_title(commands[label_id.numpy()])
    ax.axis('off')
plt.show()

def preprocess_dataset(files):
    files_ds = tf.data.Dataset.from_tensor_slices(files)
    ss_ds = files_ds.map( map_func=get_waveform_and_label)
    sp=[]
    lb=[]
    for waveform, label in ss_ds:
        spectrogram,lab = get_spectrogram_and_label_id(waveform,label)
        sp.append( spectrogram )
        lb.append( lab)
    output_ds = tf.data.Dataset.from_tensor_slices((sp, lb))
    return output_ds

'''train_ds = spectrogram_ds

val_ds = preprocess_dataset(val_files)

test_ds = preprocess_dataset(test_files)

'''


batch_size = 4

train_ds = spectrogram_train_ds.batch(batch_size)

val_ds = spectrogram_val_ds.batch(batch_size)

#train_ds = train_ds.cache().prefetch(AUTOTUNE)

#val_ds = val_ds.cache().prefetch(AUTOTUNE)

for spectrogram, _ in spectrogram_train_ds.take(1):
    input_shape = spectrogram.shape
print('Input shape:', input_shape)
num_labels = len(commands)

# Instantiate the `tf.keras.layers.Normalization` layer.
#norm_layer = layers.Normalization()
# Fit the state of the layer to the spectrograms
# with `Normalization.adapt`.
#norm_layer.adapt(data=spectrogram_ds.map(map_func=lambda spec, label: spec))

model = models.Sequential([
    layers.Input(shape=input_shape),
    # Downsample the input.
    #layers.Resizing(32, 32),
    # Normalize.
    #norm_layer,
    layers.Conv2D(32, 3, activation='relu'),
    layers.Conv2D(64, 3, activation='relu'),
    layers.MaxPooling2D(),
    layers.Dropout(0.25),
    layers.Flatten(),
    layers.Dense(128, activation='relu'),
    layers.Dropout(0.5),
    layers.Dense(num_labels),
])

model.summary()

Input shape: (29, 32, 1)
Model: "sequential_1"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_1 (Conv2D)            (None, 27, 30, 32)        320
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 25, 28, 64)        18496
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 12, 14, 64)        0
_________________________________________________________________
dropout_2 (Dropout)          (None, 12, 14, 64)        0
_________________________________________________________________
flatten_1 (Flatten)          (None, 10752)             0
_________________________________________________________________
dense_1 (Dense)              (None, 128)               1376384
_________________________________________________________________
dropout_3 (Dropout)          (None, 128)               0
_________________________________________________________________
dense_2 (Dense)              (None, 5)                 645
=================================================================
Total params: 1,395,845
Trainable params: 1,395,845
Non-trainable params: 0
_________________________________________________________________
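The parameter counts in the summary can be checked by hand: a convolution layer has kernel x kernel x input channels x filters weights plus one bias per filter, and a dense layer has inputs x units weights plus one bias per unit. The two helper functions below are written for this check only and are not part of Keras.

```python
def conv2d_params(kernel, in_channels, filters):
    return kernel * kernel * in_channels * filters + filters

def dense_params(inputs, units):
    return inputs * units + units

conv1 = conv2d_params(3, 1, 32)          # 320
conv2 = conv2d_params(3, 32, 64)         # 18496
flat = 12 * 14 * 64                      # 10752 values after pooling and flatten
dense1 = dense_params(flat, 128)         # 1376384
dense2 = dense_params(128, 5)            # 645
print(conv1 + conv2 + dense1 + dense2)   # 1395845
```

Almost all of the weights sit in the first dense layer, which is why shrinking or removing that layer has the largest effect on model size.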

model.compile(

optimizer=tf.keras.optimizers.Adam(),

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics=['accuracy'],

)

EPOCHS = 10

history = model.fit(

train_ds,

validation_data=val_ds,

epochs=EPOCHS,

callbacks=tf.keras.callbacks.EarlyStopping(verbose=1, patience=2),

)

Epoch 1/10
30/30 [==============================] - 1s 18ms/step - loss: 1.6034 - accuracy: 0.3000 - val_loss: 1.2306 - val_accuracy: 0.6250
Epoch 2/10
30/30 [==============================] - 0s 15ms/step - loss: 1.0152 - accuracy: 0.6417 - val_loss: 0.5127 - val_accuracy: 0.9000
Epoch 3/10
30/30 [==============================] - 0s 15ms/step - loss: 0.7134 - accuracy: 0.7417 - val_loss: 0.2683 - val_accuracy: 0.9583
Epoch 4/10
30/30 [==============================] - 0s 15ms/step - loss: 0.4264 - accuracy: 0.8500 - val_loss: 0.1414 - val_accuracy: 0.9750
Epoch 5/10
30/30 [==============================] - 0s 15ms/step - loss: 0.2500 - accuracy: 0.9333 - val_loss: 0.0650 - val_accuracy: 0.9917
Epoch 6/10
30/30 [==============================] - 0s 15ms/step - loss: 0.2363 - accuracy: 0.9333 - val_loss: 0.0840 - val_accuracy: 0.9833
Epoch 7/10
30/30 [==============================] - 0s 15ms/step - loss: 0.2068 - accuracy: 0.9333 - val_loss: 0.0527 - val_accuracy: 0.9917
Epoch 8/10
30/30 [==============================] - 0s 16ms/step - loss: 0.1811 - accuracy: 0.9500 - val_loss: 0.0292 - val_accuracy: 0.9917
Epoch 9/10
30/30 [==============================] - 0s 15ms/step - loss: 0.1015 - accuracy: 0.9750 - val_loss: 0.0261 - val_accuracy: 1.0000
Epoch 10/10
30/30 [==============================] - 0s 15ms/step - loss: 0.1344 - accuracy: 0.9667 - val_loss: 0.0174 - val_accuracy: 1.0000

metrics = history.history

plt.plot(history.epoch, metrics['loss'], metrics['val_loss'])

plt.legend(['loss', 'val_loss'])

plt.show()

test_audio = []
test_labels = []
for audio, label in spectrogram_test_ds:
    test_audio.append(audio.numpy())
    test_labels.append(label.numpy())
test_audio = np.array(test_audio)
test_labels = np.array(test_labels)

y_pred = np.argmax(model.predict(test_audio), axis=1)
y_true = test_labels
test_acc = sum(y_pred == y_true) / len(y_true)
print(f'Test set accuracy: {test_acc:.0%}')

Test set accuracy: 100%

confusion_mtx = tf.math.confusion_matrix(y_true, y_pred)

plt.figure(figsize=(10, 8))

sns.heatmap(confusion_mtx,

xticklabels=commands,

yticklabels=commands,

annot=True, fmt='g')

plt.xlabel('Prediction')

plt.ylabel('Label')

plt.show()
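For readers who want to see what the accuracy and confusion-matrix calls compute, here is a small pure-Python sketch. The label lists are made up for the illustration and are not the notebook's results; the row/column convention (rows are true labels, columns are predictions) follows the one used by the heatmap above.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """Rows are true labels, columns are predicted labels."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix

def accuracy(y_true, y_pred):
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)

y_true = [0, 1, 2, 2, 4]   # made-up labels, not the notebook's data
y_pred = [0, 1, 2, 3, 4]
print(accuracy(y_true, y_pred))                 # 0.8
print(confusion_matrix(y_true, y_pred, 5)[2])   # [0, 0, 1, 1, 0]
```

Off-diagonal entries show which commands get confused with each other, which a single accuracy figure hides.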

sample_file = data_dir/'stop/stop10.raw'

sample_ds = preprocess_dataset([str(sample_file)])

for spectrogram, label in sample_ds.batch(1):
    prediction = model(spectrogram)
    plt.bar(commands, tf.nn.softmax(prediction[0]))
    plt.title(f'Predictions for "{commands[label[0]]}"')
    plt.show()

Tensor("args_0:0", shape=(), dtype=string)

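Generate a TensorFlow Lite for Microcontrollers Model

Convert the Keras model to a TensorFlow Lite model, then into a C source file that can be loaded by TensorFlow Lite for Microcontrollers. No quantization options are set on the converter below, so the model keeps its float32 weights.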

model.save('model.save')

#new_model = tf.keras.models.load_model('sound01.model')

# Check its architecture

# new_model.summary()

# Convert the model

converter = tf.lite.TFLiteConverter.from_saved_model('model.save') # path to the SavedModel directory

tflite_model = converter.convert()

# Save the model.

with open('model.tflite', 'wb') as f:
    f.write(tflite_model)

2022-04-02 20:45:24.708181: W tensorflow/python/util/util.cc:368] Sets are not currently considered sequences, but this may change in the future, so consider avoiding using them.

INFO:tensorflow:Assets written to: model.save/assets

2022-04-02 20:45:25.308771: W tensorflow/compiler/mlir/lite/python/tf_tfl_flatbuffer_helpers.cc:357] Ignored output_format.
2022-04-02 20:45:25.308807: W tensorflow/compiler/mlir/lite/python/tf_tfl_flatbuffer_helpers.cc:360] Ignored drop_control_dependency.

MODEL_TFLITE='model.tflite'

MODEL_TFLITE_MICRO='modelm.c'

# Install xxd if it is not available

#!apt-get update && apt-get -qq install xxd

#!yum install vim-common (with root)

# Convert to a C source file, i.e, a TensorFlow Lite for Microcontrollers model

!xxd -i {MODEL_TFLITE} > {MODEL_TFLITE_MICRO}

# Update variable names

REPLACE_TEXT = MODEL_TFLITE.replace('/', '_').replace('.', '_')

!sed -i 's/'{REPLACE_TEXT}'/g_model/g' {MODEL_TFLITE_MICRO}
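If xxd is not available, the same kind of C source can be produced from Python. The sketch below mimics the layout of xxd -i output (12 bytes per line, an array plus a length variable) with the variable already named g_model; the function `bytes_to_c_array` is a made-up helper and the exact whitespace of real xxd output may differ.

```python
def bytes_to_c_array(data: bytes, name: str = "g_model", per_line: int = 12) -> str:
    """Render bytes as a C unsigned char array plus a length variable."""
    lines = []
    for start in range(0, len(data), per_line):
        chunk = data[start:start + per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk))
    body = ",\n".join(lines)
    return (f"unsigned char {name}[] = {{\n{body}\n}};\n"
            f"unsigned int {name}_len = {len(data)};\n")

print(bytes_to_c_array(b"TFL3"))
```

To convert the real model, the bytes of model.tflite would be read in binary mode and passed to this function, and the returned text written to the .c file.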

Or using tinymlgen

MODEL_TFLITE_MICRO='modelm2.cc'

from tinymlgen import port

with open(MODEL_TFLITE_MICRO, 'w') as f: # change path if needed

    f.write(port(model, optimize=False))

INFO:tensorflow:Assets written to: /tmp/tmps28236dg/assets

2022-04-02 20:45:31.304939: W tensorflow/compiler/mlir/lite/python/tf_tfl_flatbuffer_helpers.cc:357] Ignored output_format.

2022-04-02 20:45:31.304979: W tensorflow/compiler/mlir/lite/python/tf_tfl_flatbuffer_helpers.cc:360] Ignored drop_control_dependency.

!ls -l modelm*

-rw-r--r-- 1 im267926 idediplis 39024113 Apr 2 20:45 modelm2.cc
-rw-r--r-- 1 im267926 idediplis 40107796 Apr 2 20:45 modelm.c

'''

model = Sequential()

model.add(layers.Dense(data.shape[1], activation='relu', input_shape=(data.shape[1],)))

model.add(layers.Dropout(0.25))

model.add(layers.Dense(np.unique(target).size * 4, activation='relu'))

model.add(layers.Dropout(0.25))

model.add(layers.Dense(np.unique(target).size, activation='softmax'))

'''

for spectrogram, _ in spectrogram_train_ds.take(1):

    input_shape = spectrogram.shape

print('Input shape:', input_shape)

num_labels = len(commands)

# Instantiate the `tf.keras.layers.Normalization` layer.

#norm_layer = layers.Normalization()

# Fit the state of the layer to the spectrograms

# with `Normalization.adapt`.

#norm_layer.adapt(data=spectrogram_train_ds.map(map_func=lambda spec, label: spec))

modelr = models.Sequential([

    #norm_layer,

    layers.Input(shape=input_shape),

    # Downsample the input.

    #layers.Resizing(32, 32),

    # Normalize.

    #norm_layer,

    layers.Conv2D(16, 3, activation='relu'),

    #layers.Conv2D(64, 3, activation='relu'),

    layers.MaxPooling2D(),

    layers.Dropout(0.25),

    layers.Flatten(),

    #layers.Dense(32, activation='relu'),

    layers.Dropout(0.5),

    layers.Dense(num_labels),

])

modelr.compile(

    optimizer=tf.keras.optimizers.Adam(),

    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

    metrics=['accuracy'],

)

modelr.summary()

Input shape: (29, 32, 1)
Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d (Conv2D)              (None, 27, 30, 16)        160
_________________________________________________________________
max_pooling2d (MaxPooling2D) (None, 13, 15, 16)        0
_________________________________________________________________
dropout (Dropout)            (None, 13, 15, 16)        0
_________________________________________________________________
flatten (Flatten)            (None, 3120)              0
_________________________________________________________________
dropout_1 (Dropout)          (None, 3120)              0
_________________________________________________________________
dense (Dense)                (None, 5)                 15605
=================================================================
Total params: 15,765
Trainable params: 15,765
Non-trainable params: 0
_________________________________________________________________
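The shapes and parameter counts in this summary can be checked by hand. The sketch below redoes the arithmetic for a 3x3 'valid' convolution, a 2x2 max pooling and the final dense layer. The helper names (`conv2d_valid`, `max_pool2d`, `dense_params`, `summarize`) are made up for this illustration and are not Keras functions.

```python
def conv2d_valid(h, w, c_in, filters, kernel):
    """Shape and parameter count of a stride-1 Conv2D with 'valid' padding."""
    shape = (h - kernel + 1, w - kernel + 1, filters)
    params = kernel * kernel * c_in * filters + filters  # weights + biases
    return shape, params


def max_pool2d(h, w, c, pool=2):
    """Shape after MaxPooling2D with pool size 2 and stride 2 (rounds down)."""
    return (h // pool, w // pool, c)


def dense_params(n_in, units):
    """Parameter count of a Dense layer: one weight per input/unit pair plus biases."""
    return n_in * units + units


def summarize(input_shape=(29, 32, 1), filters=16, kernel=3, num_labels=5):
    h, w, c = input_shape
    conv_shape, conv_p = conv2d_valid(h, w, c, filters, kernel)
    pool_shape = max_pool2d(*conv_shape)
    flat = pool_shape[0] * pool_shape[1] * pool_shape[2]
    dense_p = dense_params(flat, num_labels)
    return {
        "conv2d": (conv_shape, conv_p),
        "max_pooling2d": (pool_shape, 0),
        "flatten": ((flat,), 0),
        "dense": ((num_labels,), dense_p),
        "total": conv_p + dense_p,
    }


if __name__ == "__main__":
    for name, value in summarize().items():
        print(name, value)
```

Almost all of the 15,765 parameters sit in the dense layer, which is why removing the intermediate `Dense(32)` layer keeps the model small.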

modelr.compile(

    optimizer=tf.keras.optimizers.Adam(),

    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

    metrics=['accuracy'],

)

EPOCHS = 100

history = modelr.fit(

    train_ds,

    validation_data=val_ds,

    epochs=EPOCHS,

    callbacks=[tf.keras.callbacks.EarlyStopping(verbose=1, patience=2)],

)

Epoch 1/100
30/30 [==============================] - 0s 5ms/step - loss: 353.1902 - accuracy: 0.2750 - val_loss: 85.3436 - val_accuracy: 0.4833
Epoch 2/100
30/30 [==============================] - 0s 2ms/step - loss: 247.6583 - accuracy: 0.3917 - val_loss: 86.5176 - val_accuracy: 0.6000
Epoch 3/100
30/30 [==============================] - 0s 2ms/step - loss: 171.9676 - accuracy: 0.4917 - val_loss: 29.0585 - val_accuracy: 0.7417
Epoch 4/100
30/30 [==============================] - 0s 2ms/step - loss: 81.0210 - accuracy: 0.6083 - val_loss: 34.8178 - val_accuracy: 0.7250
Epoch 5/100
30/30 [==============================] - 0s 2ms/step - loss: 46.7976 - accuracy: 0.6583 - val_loss: 9.1939 - val_accuracy: 0.8667
Epoch 6/100
30/30 [==============================] - 0s 2ms/step - loss: 38.3560 - accuracy: 0.6917 - val_loss: 6.6355 - val_accuracy: 0.8750
Epoch 7/100
30/30 [==============================] - 0s 2ms/step - loss: 31.7097 - accuracy: 0.6917 - val_loss: 0.8971 - val_accuracy: 0.9583
Epoch 8/100
30/30 [==============================] - 0s 2ms/step - loss: 18.9519 - accuracy: 0.7500 - val_loss: 1.1611 - val_accuracy: 0.9583
Epoch 9/100
30/30 [==============================] - 0s 2ms/step - loss: 24.4846 - accuracy: 0.7917 - val_loss: 2.4979 - val_accuracy: 0.9500
Epoch 00009: early stopping
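Training stops at epoch 9 because `val_loss` has not improved for two consecutive epochs (`patience=2`). The sketch below is a simplified model of that rule, assuming the callback monitors `val_loss` with a zero improvement threshold. `early_stop_epoch` is a hypothetical helper, not the Keras implementation.

```python
def early_stop_epoch(val_losses, patience=2, min_delta=0.0):
    """Return the 1-based epoch at which training would stop, or None."""
    best = float("inf")
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best, wait = loss, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None


# val_loss values copied from the training log above.
VAL_LOSS = [85.3436, 86.5176, 29.0585, 34.8178, 9.1939,
            6.6355, 0.8971, 1.1611, 2.4979]

if __name__ == "__main__":
    print("stops at epoch", early_stop_epoch(VAL_LOSS))
```

The best `val_loss` is reached at epoch 7. Since `restore_best_weights` defaults to `False`, the model keeps the weights from the last epoch rather than from the best one.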

metrics = history.history

plt.plot(history.epoch, metrics['loss'], metrics['val_loss'])

plt.legend(['loss', 'val_loss'])

plt.show()

test_audio = []

test_labels = []

for audio, label in spectrogram_test_ds:

    test_audio.append(audio.numpy())

    test_labels.append(label.numpy())

test_audio = np.array(test_audio)

test_labels = np.array(test_labels)

y_pred = np.argmax(modelr.predict(test_audio), axis=1)

y_true = test_labels

test_acc = sum(y_pred == y_true) / len(y_true)

print(f'Test set accuracy: {test_acc:.0%}')

Test set accuracy: 95%

confusion_mtx = tf.math.confusion_matrix(y_true, y_pred)

plt.figure(figsize=(10, 8))

sns.heatmap(confusion_mtx,

            xticklabels=commands,

            yticklabels=commands,

            annot=True, fmt='g')

plt.xlabel('Prediction')

plt.ylabel('Label')

plt.show()
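The heatmap shows true labels as rows and predictions as columns, so per-command recall can be read off each row. The sketch below does that calculation on a small matrix of invented counts (they are not the notebook's results). `per_class_recall` and `overall_accuracy` are illustrative helper names.

```python
def per_class_recall(cm):
    """Fraction of each true class (row) that was predicted correctly."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(cm)]


def overall_accuracy(cm):
    """Correct predictions (the diagonal) divided by all predictions."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


# Invented counts for five classes; rows = true label, columns = prediction.
EXAMPLE_CM = [
    [4, 0, 0, 0, 0],
    [0, 3, 1, 0, 0],
    [0, 0, 4, 0, 0],
    [0, 0, 0, 4, 0],
    [1, 0, 0, 0, 3],
]

if __name__ == "__main__":
    print(per_class_recall(EXAMPLE_CM))
    print(overall_accuracy(EXAMPLE_CM))
```

In the notebook the same functions could be fed `confusion_mtx.numpy().tolist()`.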

sample_file = data_dir/'avance/avance10.raw'

sample_ds = preprocess_dataset([str(sample_file)])

for spectrogram, label in sample_ds.batch(1):

    prediction = modelr(spectrogram)

    plt.bar(commands, tf.nn.softmax(prediction[0]))

    plt.title(f'Predictions for "{commands[label[0]]}"')

    plt.show()

Tensor("args_0:0", shape=(), dtype=string)

print(prediction.numpy())

tf.nn.softmax(prediction.numpy())

[[ 62.3935 -176.44669 -87.4562 -182.80154 36.055046]]

<tf.Tensor: shape=(1, 5), dtype=float32, numpy=

array([[1.00000e+00, 0.00000e+00, 0.00000e+00, 0.00000e+00, 3.64212e-12]],

dtype=float32)>

prediction[0]

<tf.Tensor: shape=(5,), dtype=float32, numpy=

array([ 62.3935 , -176.44669 , -87.4562 , -182.80154 , 36.055046],

dtype=float32)>
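The model outputs raw logits (it was compiled with `from_logits=True`), so a softmax is needed to turn them into probabilities. The sketch below recomputes the probabilities from the logits printed above in plain Python, subtracting the maximum first so that `exp` cannot overflow. The function name `softmax` here is a local helper, not the TensorFlow op.

```python
import math


def softmax(logits):
    """Numerically stable softmax: shift by the maximum before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Logits copied from the output above.
LOGITS = [62.3935, -176.44669, -87.4562, -182.80154, 36.055046]

if __name__ == "__main__":
    for p in softmax(LOGITS):
        print(f"{p:.5e}")
```

In double precision the three very negative logits give tiny but non-zero probabilities. TensorFlow works in float32 here, where they underflow to exactly 0, which is what the printed tensor shows.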

modelr.save('modelr.save')

#new_model = tf.keras.models.load_model('sound01.model')

# Check its architecture

# new_model.summary()

# Convert the model

#converter = tf.lite.TFLiteConverter.from_saved_model('modelr.save') # path to the SavedModel directory

converter = tf.lite.TFLiteConverter.from_saved_model('modelr.save')

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.target_spec.supported_types = [tf.float16]

tflite_model = converter.convert()

# Save the model.

with open('modelr.tflite', 'wb') as f:

    f.write(tflite_model)

INFO:tensorflow:Assets written to: modelr.save/assets
2022-04-03 21:08:42.026821: I tensorflow/core/grappler/devices.cc:55] Number of eligible GPUs (core count >= 8, compute capability >= 0.0): 0
2022-04-03 21:08:42.026893: I tensorflow/core/grappler/clusters/single_machine.cc:356] Starting new session
2022-04-03 21:08:42.028249: I tensorflow/core/grappler/optimizers/meta_optimizer.cc:797] Optimization results for grappler item: graph_to_optimize
2022-04-03 21:08:42.028259: I tensorflow/core/grappler/optimizers/meta_optimizer.cc:799] function_optimizer: Graph size after: 29 nodes (22), 39 edges (32), time = 0.512ms.
2022-04-03 21:08:42.028263: I tensorflow/core/grappler/optimizers/meta_optimizer.cc:799] function_optimizer: function_optimizer did nothing. time = 0.012ms.
2022-04-03 21:08:42.042795: I tensorflow/core/grappler/devices.cc:55] Number of eligible GPUs (core count >= 8, compute capability >= 0.0): 0
2022-04-03 21:08:42.042844: I tensorflow/core/grappler/clusters/single_machine.cc:356] Starting new session
2022-04-03 21:08:42.045023: I tensorflow/core/grappler/optimizers/meta_optimizer.cc:797] Optimization results for grappler item: graph_to_optimize
2022-04-03 21:08:42.045034: I tensorflow/core/grappler/optimizers/meta_optimizer.cc:799] constant_folding: Graph size after: 25 nodes (-4), 31 edges (-8), time = 1.044ms.
2022-04-03 21:08:42.045037: I tensorflow/core/grappler/optimizers/meta_optimizer.cc:799] constant_folding: Graph size after: 25 nodes (0), 31 edges (0), time = 0.279ms.
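Requesting `tf.float16` as the supported type asks the converter to store weights in half precision, which halves their size at the cost of a small rounding error. The sketch below only illustrates that numeric effect on single values using Python's `struct` half-float format. It does not reproduce what the converter does internally, and the helper names are made up.

```python
import struct


def to_float16_and_back(x: float) -> float:
    """Round `x` to IEEE 754 half precision and convert it back to a Python float."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def relative_error(x: float) -> float:
    """Relative rounding error introduced by storing `x` as float16."""
    return abs(to_float16_and_back(x) - x) / abs(x)


if __name__ == "__main__":
    for w in (1.0, 0.1, -0.0375, 3.14159):  # arbitrary example weights
        print(w, to_float16_and_back(w), relative_error(w))
```

For values in the normal float16 range the relative error stays below 2^-11 (about 0.05%), which a classifier like this one usually tolerates. Comparing predictions before and after conversion is still the only real check.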

MODEL_TFLITE='modelr.tflite'

MODEL_TFLITE_MICRO='modelmr.c'

# Install xxd if it is not available

#!apt-get update && apt-get -qq install xxd

#!yum install vim-common (with root)

# Convert to a C source file, i.e, a TensorFlow Lite for Microcontrollers model

!xxd -i {MODEL_TFLITE} > {MODEL_TFLITE_MICRO}

# Update variable names

REPLACE_TEXT = MODEL_TFLITE.replace('/', '_').replace('.', '_')

!sed -i 's/'{REPLACE_TEXT}'/g_model/g' {MODEL_TFLITE_MICRO}

MODEL_TFLITE_MICRO='modelr2.cpp'

from tinymlgen import port

with open(MODEL_TFLITE_MICRO, 'w') as f: # change path if needed

    f.write(port(modelr, optimize=False))

2022-04-03 21:08:43.935395: I tensorflow/core/grappler/devices.cc:55] Number of eligible GPUs (core count >= 8, compute capability >= 0.0): 0
2022-04-03 21:08:43.935514: I tensorflow/core/grappler/clusters/single_machine.cc:356] Starting new session
2022-04-03 21:08:43.936257: I tensorflow/core/grappler/optimizers/meta_optimizer.cc:797] Optimization results for grappler item: graph_to_optimize
2022-04-03 21:08:43.936267: I tensorflow/core/grappler/optimizers/meta_optimizer.cc:799] function_optimizer: function_optimizer did nothing. time = 0.001ms.
2022-04-03 21:08:43.936270: I tensorflow/core/grappler/optimizers/meta_optimizer.cc:799] function_optimizer: function_optimizer did nothing. time = 0.001ms.
2022-04-03 21:08:43.947840: I tensorflow/core/grappler/devices.cc:55] Number of eligible GPUs (core count >= 8, compute capability >= 0.0): 0
2022-04-03 21:08:43.947890: I tensorflow/core/grappler/clusters/single_machine.cc:356] Starting new session
2022-04-03 21:08:43.949642: I tensorflow/core/grappler/optimizers/meta_optimizer.cc:797] Optimization results for grappler item: graph_to_optimize
2022-04-03 21:08:43.949652: I tensorflow/core/grappler/optimizers/meta_optimizer.cc:799] constant_folding: Graph size after: 16 nodes (-4), 15 edges (-4), time = 0.772ms.
2022-04-03 21:08:43.949656: I tensorflow/core/grappler/optimizers/meta_optimizer.cc:799] constant_folding: Graph size after: 16 nodes (0), 15 edges (0), time = 0.21ms.

!ls -ltr model*

-rw-r--r-- 1 im267926 idediplis 61 30 mars 13:12 model.cc
-rw-r--r-- 1 im267926 idediplis 39024665 30 mars 13:14 modelv.cc
-rw-r--r-- 1 im267926 idediplis 6504048 30 mars 13:29 model.tfl
-rw-r--r-- 1 im267926 idediplis 6503956 2 avril 20:45 model.tflite
-rw-r--r-- 1 im267926 idediplis 40107796 2 avril 20:45 modelm.c
-rw-r--r-- 1 im267926 idediplis 39024113 2 avril 20:45 modelm2.cc
-rw-r--r-- 1 im267926 idediplis 36912 3 avril 21:08 modelr.tflite
-rw-r--r-- 1 im267926 idediplis 227688 3 avril 21:08 modelmr.c
-rw-r--r-- 1 im267926 idediplis 417639 3 avril 21:08 modelr2.cpp

models:
total 64
-rw-r--r-- 1 im267926 idediplis 1338 22 déc. 11:35 CODEOWNERS
-rw-r--r-- 1 im267926 idediplis 337 22 déc. 11:35 AUTHORS
-rw-r--r-- 1 im267926 idediplis 1115 22 déc. 11:35 ISSUES.md
-rw-r--r-- 1 im267926 idediplis 390 22 déc. 11:35 CONTRIBUTING.md
-rw-r--r-- 1 im267926 idediplis 2604 22 déc. 11:35 README.md
-rw-r--r-- 1 im267926 idediplis 11405 22 déc. 11:35 LICENSE
drwxr-xr-x 2 im267926 idediplis 23 22 déc. 11:35 community
drwxr-xr-x 13 im267926 idediplis 266 22 déc. 11:35 official
drwxr-xr-x 5 im267926 idediplis 222 22 déc. 11:35 orbit
drwxr-xr-x 4 im267926 idediplis 98 22 déc. 11:35 tensorflow_models
drwxr-xr-x 24 im267926 idediplis 4096 22 déc. 12:09 research
-rw-r--r-- 1 im267926 idediplis 323 7 janv. 15:00 01.txt
drwxr-xr-x 4 im267926 idediplis 84 18 mars 13:31 model
-rw-r--r-- 1 im267926 idediplis 2932 18 mars 13:33 model_no_quant.tflite
-rw-r--r-- 1 im267926 idediplis 2408 18 mars 13:33 model.tflite
-rw-r--r-- 1 im267926 idediplis 14913 18 mars 13:37 model.cc

model.save:
total 180
drwxr-xr-x 2 im267926 idediplis 10 30 mars 13:28 assets
drwxr-xr-x 2 im267926 idediplis 78 2 avril 20:45 variables
-rw-r--r-- 1 im267926 idediplis 162661 2 avril 20:45 saved_model.pb
-rw-r--r-- 1 im267926 idediplis 17339 2 avril 20:45 keras_metadata.pb

modelr.save:
total 120
drwxr-xr-x 2 im267926 idediplis 10 3 avril 10:53 assets
-rw-r--r-- 1 im267926 idediplis 13952 3 avril 16:48 keras_metadata.pb
drwxr-xr-x 2 im267926 idediplis 78 3 avril 21:08 variables
-rw-r--r-- 1 im267926 idediplis 106432 3 avril 21:08 saved_model.pb
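The file sizes in this listing explain why the reduced model matters on a microcontroller. The sketch below compares the two `.tflite` files with a flash budget and relates the size of `modelr.tflite` to its 15,765 parameters. The 4 MiB budget is an assumption about a typical ESP32 module, not something stated in this notebook, and the helper names are illustrative.

```python
FLOAT16_BYTES = 2
FLOAT32_BYTES = 4

# Sizes in bytes, copied from the listing above.
MODEL_TFLITE = 6_503_956
MODELR_TFLITE = 36_912
N_PARAMS_MODELR = 15_765  # from modelr.summary()

# Assumption: a module with 4 MiB of flash; check your own board.
ASSUMED_FLASH = 4 * 1024 * 1024


def weight_bytes(n_params, bytes_per_weight):
    """Raw storage needed for the weights alone."""
    return n_params * bytes_per_weight


def fits(size_bytes, budget_bytes):
    """True when a binary blob of `size_bytes` fits within `budget_bytes`."""
    return size_bytes <= budget_bytes


if __name__ == "__main__":
    fp16 = weight_bytes(N_PARAMS_MODELR, FLOAT16_BYTES)
    print("float32 weights:", weight_bytes(N_PARAMS_MODELR, FLOAT32_BYTES), "bytes")
    print("float16 weights:", fp16, "bytes")
    print("flatbuffer overhead:", MODELR_TFLITE - fp16, "bytes")
    print("model.tflite fits:", fits(MODEL_TFLITE, ASSUMED_FLASH))
    print("modelr.tflite fits:", fits(MODELR_TFLITE, ASSUMED_FLASH))
```

If the weights are stored as float16, as the conversion requests, they account for 31,530 of the 36,912 bytes, and the rest is graph structure and metadata. The usable application partition is smaller than the whole flash chip, so the real budget is tighter than the figure assumed here.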

#include <stdlib.h>

#include <stdint.h>

#include <stdio.h>

#include <math.h>

#include <assert.h>

#include "esp_task_wdt.h"

#include <TensorFlowLite_ESP32.h>

#include "main_functions.h"

#include "constants.h"

#include "output_handler.h"

#include "sine_model_data.h"

#include "tensorflow/lite/experimental/micro/kernels/all_ops_resolver.h"

#include "tensorflow/lite/experimental/micro/micro_error_reporter.h"

#include "tensorflow/lite/experimental/micro/micro_interpreter.h"

#include "tensorflow/lite/schema/schema_generated.h"

#include "tensorflow/lite/version.h"

#include "tensorflow/lite/experimental/micro/kernels/micro_ops.h"

#include "tensorflow/lite/experimental/micro/micro_mutable_op_resolver.h"

// Globals, used for compatibility with Arduino-style sketches.

namespace {

tflite::ErrorReporter* error_reporter = nullptr;

const tflite::Model* model = nullptr;

tflite::MicroInterpreter* interpreter = nullptr;

TfLiteTensor* input = nullptr;

TfLiteTensor* output = nullptr;

int inference_count = 0;

// Create an area of memory to use for input, output, and intermediate arrays.

// Finding the minimum value for your model may require some trial and error.

constexpr int kTensorArenaSize = 70 * 1024;

uint8_t tensor_arena[kTensorArenaSize];

} // namespace

// The name of this function is important for Arduino compatibility.

#define PinSound 32

unsigned long intervalMicros = 250; //us

unsigned long nextMicros = 0;

int sending;

float spectro[30*32];

void setup() {

Serial.begin(115200);

// Set up logging. Google style is to avoid globals or statics because of

// lifetime uncertainty, but since this has a trivial destructor it's okay.

// NOLINTNEXTLINE(runtime-global-variables)

static tflite::MicroErrorReporter micro_error_reporter;

error_reporter = &micro_error_reporter;

// Map the model into a usable data structure. This doesn't involve any

// copying or parsing, it's a very lightweight operation.

model = tflite::GetModel(g_sine_model_data);

if (model->version() != TFLITE_SCHEMA_VERSION) {

error_reporter->Report(

"Model provided is schema version %d not equal "

"to supported version %d.",

model->version(), TFLITE_SCHEMA_VERSION);

return;

}

// Pull in only the operation implementations we need.

// This relies on a complete list of all the ops needed by this graph.

// An easier approach is to just use the AllOpsResolver, but this will

// incur some penalty in code space for op implementations that are not

// needed by this graph.

//

// tflite::ops::micro::AllOpsResolver resolver;

// NOLINTNEXTLINE(runtime-global-variables)

static tflite::MicroMutableOpResolver micro_mutable_op_resolver;

micro_mutable_op_resolver.AddBuiltin(

tflite::BuiltinOperator_DEPTHWISE_CONV_2D,

tflite::ops::micro::Register_DEPTHWISE_CONV_2D());

micro_mutable_op_resolver.AddBuiltin(

tflite::BuiltinOperator_FULLY_CONNECTED,

tflite::ops::micro::Register_FULLY_CONNECTED());

micro_mutable_op_resolver.AddBuiltin(tflite::BuiltinOperator_SOFTMAX,

tflite::ops::micro::Register_SOFTMAX());

// This pulls in all the operation implementations we need.

// NOLINTNEXTLINE(runtime-global-variables)

static tflite::ops::micro::AllOpsResolver resolver;

// Build an interpreter to run the model with.

static tflite::MicroInterpreter static_interpreter(

model, resolver, tensor_arena, kTensorArenaSize, error_reporter);

interpreter = &static_interpreter;

// Allocate memory from the tensor_arena for the model's tensors.

TfLiteStatus allocate_status = interpreter->AllocateTensors();

if (allocate_status != kTfLiteOk) {

error_reporter->Report("AllocateTensors() failed");

return;

}

// Obtain pointers to the model's input and output tensors.

input = interpreter->input(0);

output = interpreter->output(0);

// Keep track of how many inferences we have performed.

inference_count = 0;

Serial.println(input->dims->size);

Serial.println(input->type);

xTaskCreatePinnedToCore(

loop2,

"loop2", // Task name

10000, // Stack size (bytes)

NULL, // Parameter

10, // Task priority

NULL, // Task handle

0

);

esp_task_wdt_init(1800,false);

esp_task_wdt_add(NULL);

nextMicros = micros();

Serial.println("Ok");

}

// The name of this function is important for Arduino compatibility.

volatile int infer=0; // set by loop2() on core 0, read by loop(); volatile stops the compiler from caching it

void loop()

{

if(infer==1)

{

infer=0;

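// Copy the spectrogram into the model's input tensor, never writing more than the tensor can hold

size_t nbytes = sizeof(spectro) < input->bytes ? sizeof(spectro) : input->bytes;

memcpy(input->data.f, spectro, nbytes);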

// Run inference, and report any error

TfLiteStatus invoke_status = interpreter->Invoke();

if (invoke_status != kTfLiteOk) {

error_reporter->Report("Invoke failed");

return;

}

// display output

double sfm[5];

size_t n = sizeof(sfm) / sizeof(double);

// Serial.println(n);

for(int i=0;i<n;i++)

sfm[i]=(double) output->data.f[i];

softmax(sfm, n);

float max=0;

int indice=-1;

for(int i=0;i<5;i++)

{

Serial.print(sfm[i]); Serial.print(" ");

if(sfm[i] > max )

{

max=sfm[i] ;

indice=i;

}

}

if(indice>=0 && max > 0.5)

{

switch(indice)

{

case 0:

Serial.print(" avance ");

break;

case 1:

Serial.print(" recule ");

break;

case 2:

Serial.print(" stop ");

break;

case 3:

Serial.print(" droite ");

break;

case 4:

Serial.print(" gauche ");

break;

}

}

Serial.println(millis()/1000);

}

}

/*

// Read the predicted y value from the model's output tensor

float y_val = output->data.f[0];

// Output the results. A custom HandleOutput function can be implemented

// for each supported hardware target.

HandleOutput(error_reporter, x_val, y_val);

// Increment the inference_counter, and reset it if we have reached

// the total number per cycle

inference_count += 1;

if (inference_count >= kInferencesPerCycle) inference_count = 0;

}*/

long soutbuf[2024];

int outbuf=0;

void loop2(void * parameter) {

for(;;){

unsigned long currentMicros = micros();

if(currentMicros >= nextMicros )

{

nextMicros = currentMicros + intervalMicros;

int level = analogRead(PinSound);

level = map(level, 0, 4095, 0, 255);

if(outbuf>=2024)

{

// Serial.println("outbuf 0");

outbuf=0;

computespec();

infer=1;

//displayspec();

}

soutbuf[outbuf]=level;

outbuf++;

}

}

}

void computespec()

{

for(int step=0;step<30;step++)

{

// window of 128 samples, offset (hop) of 64 between windows

// imff(int32_t *in,int32_t N,int32_t *out)

imff(soutbuf+step*64, 128, spectro+step*32);

}

}

void displayspec()

{

for(int step=0;step<30;step++)

{

Serial.println("");

for(int freq=0;freq<32;freq++)

{

Serial.print(" "); Serial.print(spectro[step*32+freq]); } // each row holds 32 bins, matching computespec()

}

}

void softmax(double* input, size_t size) {

// assert(0 <= size <= sizeof(input) / sizeof(double));

int i;

double m, sum, constant;

m = -INFINITY;

for (i = 0; i < size; ++i) {

if (m < input[i]) {

m = input[i];

}

}

sum = 0.0;

for (i = 0; i < size; ++i) {

sum += exp(input[i] - m);

}

constant = m + log(sum);

for (i = 0; i < size; ++i) {

input[i] = exp(input[i] - constant);

}

}
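The constants in the sketch above fix the audio framing: one sample every 250 µs, a 2024-sample buffer, and 30 windows of 128 samples taken every 64 samples, each reduced to 32 frequency bins. The Python below rechecks that arithmetic. The constant and function names are chosen for this illustration only.

```python
SAMPLE_PERIOD_US = 250  # intervalMicros
BUFFER_LEN = 2024       # length of soutbuf
WINDOW = 128            # samples passed to imff per call
HOP = 64                # offset between consecutive windows
FRAMES = 30             # iterations in computespec()
BINS = 32               # values written per frame


def sample_rate_hz(period_us):
    """Sampling rate implied by a fixed sampling period in microseconds."""
    return 1_000_000 / period_us


def frame_bounds(step):
    """First sample and one-past-last sample read for frame `step`."""
    start = step * HOP
    return start, start + WINDOW


def flat_index(step, freq):
    """Position of (frame, bin) in the flat spectro array, rows of BINS values."""
    return step * BINS + freq


if __name__ == "__main__":
    print("sample rate:", sample_rate_hz(SAMPLE_PERIOD_US), "Hz")
    print("buffer duration:", BUFFER_LEN * SAMPLE_PERIOD_US / 1_000_000, "s")
    print("last frame reads:", frame_bounds(FRAMES - 1))
    print("last index:", flat_index(FRAMES - 1, BINS - 1))
```

The last window ends at sample 1984, so it stays inside the 2024-sample buffer, and the flat array needs exactly 30 × 32 = 960 floats. The model summary earlier reports an input of 29 × 32, while the sketch fills 30 × 32 values, so it is worth confirming that the on-device framing matches the training pipeline.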

Output samples ¶